Speech keywords classifier

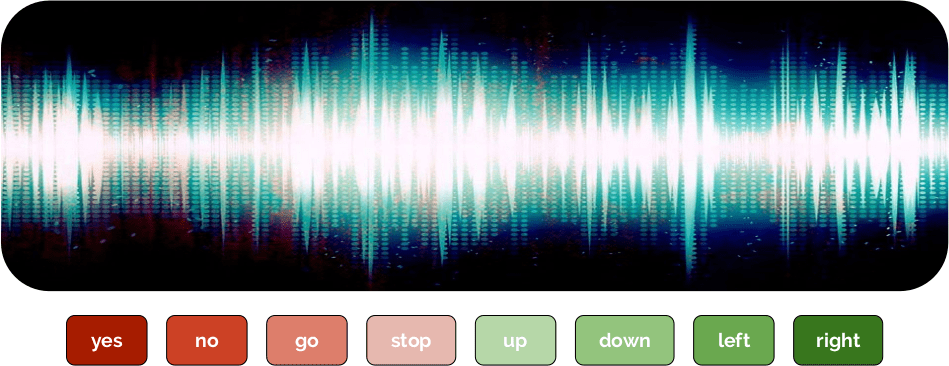

Train a speech classifier to distinguish between different spoken keywords in audio files.

Published by

DEEP-Hybrid-DataCloud Consortium


Model Description

This is a plug-and-play tool to train and evaluate a speech-to-text tool using deep neural networks. The network architecture is based on one of the tutorials provided by TensorFlow [1]. That tutorial in turn builds on architectures described in the paper "Convolutional Neural Networks for Small-footprint Keyword Spotting" [2].

There are many different approaches to building neural network models for audio, including recurrent networks and dilated convolutions. This model is based on the kind of convolutional network that will feel very familiar to anyone who has worked with image recognition. That may seem surprising at first, since audio is inherently a one-dimensional signal that varies continuously over time, not a 2D spatial problem.

We define a window of time that we expect the spoken words to fit into, and convert the audio signal in that window into an image. To do this, the incoming audio samples are grouped into short segments, each just a few milliseconds long. The strength of the frequencies across a set of bands is then calculated for each segment. Each segment's set of frequency strengths is treated as a vector of numbers, and these vectors are arranged in time order to form a two-dimensional array. This array can then be treated like a single-channel image, known as a spectrogram. These spectrograms are the input used for training.

The container does not come with a pretrained model: it must first be trained on a dataset before it can be used for prediction.
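The spectrogram construction described above can be sketched with NumPy as follows. This is an illustrative sketch, not the module's own preprocessing. The sample rate (16 kHz), the 1000 ms clip window, the 30 ms segment length, the 10 ms stride and the Hann taper are all assumptions. The module may also apply further processing on top of a plain spectrogram.

```python
import numpy as np


def spectrogram(audio, sample_rate=16000, clip_ms=1000, window_ms=30, stride_ms=10):
    """Turn a 1-D audio signal into a 2-D (time x frequency) magnitude array.

    All default parameter values are illustrative assumptions.
    """
    # Fix the time window the spoken word must fit into: trim long input,
    # then pad short input with trailing silence (zeros).
    clip_len = sample_rate * clip_ms // 1000
    audio = np.asarray(audio, dtype=np.float32)[:clip_len]
    audio = np.pad(audio, (0, clip_len - len(audio)))

    window = sample_rate * window_ms // 1000  # samples per short segment
    stride = sample_rate * stride_ms // 1000  # hop between segment starts
    n_frames = 1 + (clip_len - window) // stride

    # Taper each segment to reduce spectral leakage at its edges.
    taper = np.hanning(window)
    frames = np.stack(
        [audio[i * stride : i * stride + window] * taper for i in range(n_frames)]
    )

    # Strength of each frequency band per segment; rows are in time order,
    # so the result can be treated as a single-channel image.
    return np.abs(np.fft.rfft(frames, axis=1))
```

With these defaults, one second of 16 kHz audio yields 98 segments of 241 frequency bins each. That is the "image" a convolutional network would consume.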

The PREDICT method expects an audio file (or the URL of an audio file) as input and returns a JSON response with the top 5 predictions.
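The exact JSON layout returned by the module is not documented here. The following is therefore only a hypothetical sketch of how a top-5 response can be assembled from a classifier's per-keyword probabilities. The function name `top_k_predictions`, the keys `predictions`, `label` and `probability`, and the keyword list in the test are assumptions, not the module's actual output format.

```python
import json


def top_k_predictions(probabilities, labels, k=5):
    """Return a JSON string with the k most probable labels, best first.

    Hypothetical helper: the JSON keys used here are illustrative only.
    """
    if len(probabilities) != len(labels):
        raise ValueError("probabilities and labels must have the same length")
    # Sort (label, probability) pairs by probability, highest first.
    ranked = sorted(zip(labels, probabilities), key=lambda pair: pair[1], reverse=True)
    top = [{"label": label, "probability": round(float(p), 4)} for label, p in ranked[:k]]
    return json.dumps({"predictions": top})
```

Returning only the top few labels keeps the response small and still shows how confident the classifier is relative to the closest alternatives.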

References

Test this module

You can test and execute this module in various ways.

Execute locally on your computer using Docker

You can run this module directly on your computer, assuming that you have Docker installed, by following these steps:

$ docker pull deephdc/deep-oc-speech-to-text-tf

$ docker run -ti -p 5000:5000 deephdc/deep-oc-speech-to-text-tf

Execute on your computer using udocker

If you do not have Docker available or you do not want to install it, you can use udocker within a Python virtualenv:

$ virtualenv udocker

$ source udocker/bin/activate

(udocker) $ pip install udocker

(udocker) $ udocker pull deephdc/deep-oc-speech-to-text-tf

(udocker) $ udocker create deephdc/deep-oc-speech-to-text-tf

(udocker) $ udocker run -p 5000:5000 deephdc/deep-oc-speech-to-text-tf

In either case, once the module is running, point your browser to http://127.0.0.1:5000/ and you will see the API documentation. There you can test the module functionality as well as perform other actions (such as training).
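If you start the container from a script rather than by hand, it can help to wait until the API answers before opening the browser or sending requests. The sketch below uses only the Python standard library. It assumes nothing about the API beyond the http://127.0.0.1:5000/ address given above. The function name `wait_for_api` and the timeout values are illustrative choices.

```python
import time
import urllib.request


def wait_for_api(url="http://127.0.0.1:5000/", timeout_s=60.0, interval_s=1.0):
    """Poll `url` until it answers successfully or `timeout_s` elapses.

    Returns True if the endpoint responded, False on timeout.
    Hypothetical helper, not part of the module itself.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # urlopen raises on connection errors and on 4xx/5xx responses.
            with urllib.request.urlopen(url, timeout=interval_s):
                return True
        except OSError:
            pass  # URLError, refused connections and timeouts: container still starting
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, call `wait_for_api()` right after `docker run -d ...` or `udocker run ...` in a startup script. Open the API page only once the call returns `True`.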

For more information, refer to the user documentation.

Train this module

You can train this model using the DEEP framework. To execute this module on our pilot e-Infrastructure, you need to be registered in the DEEP IAM.

Once you are registered, you can go to our training dashboard to configure and train it.

For more information, refer to the user documentation.