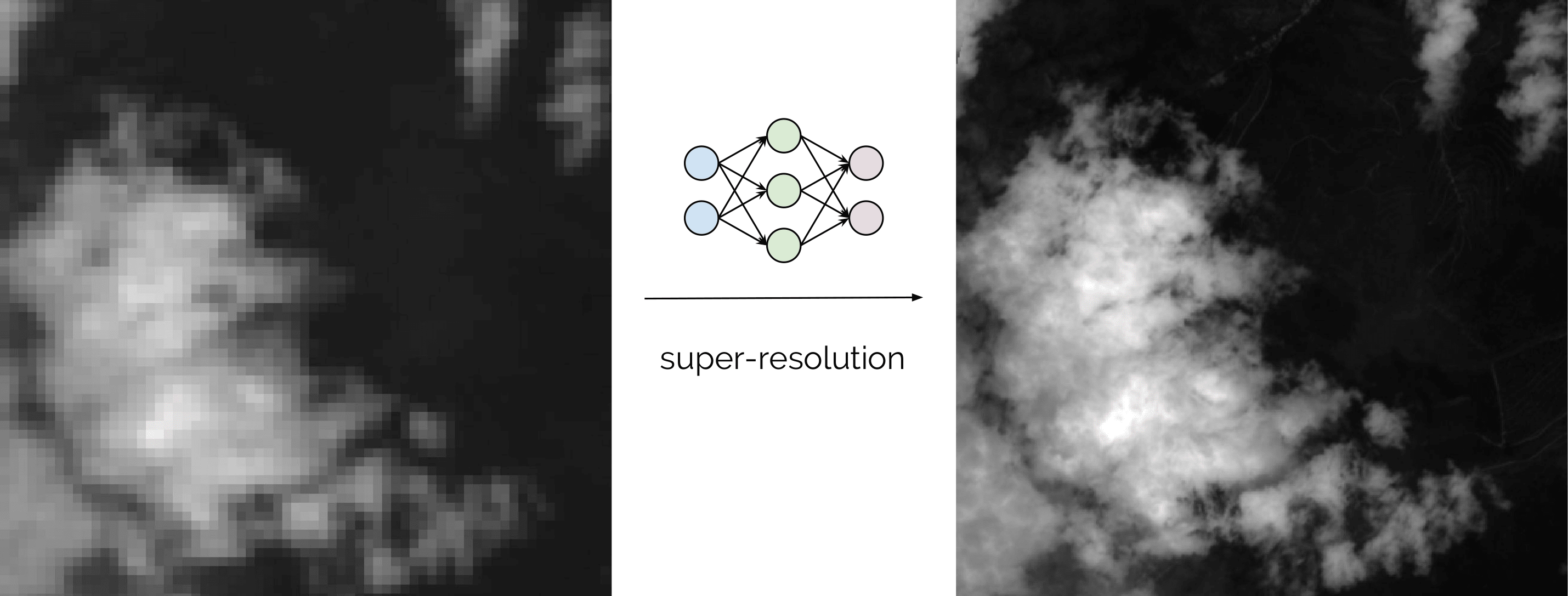

Upscale multispectral satellite images

Upscale (superresolve) low resolution bands to high resolution in multispectral satellite imagery.

Model | Trainable | Inference | Pre-trained

Published by

DEEP-Hybrid-DataCloud Consortium

Model Description

With the latest missions launched by the European Space Agency (ESA) and National Aeronautics and Space Administration (NASA) equipped with the latest technologies in multi-spectral sensors, we face an unprecedented amount of data with spatial and temporal resolutions never reached before. Exploring the potential of this data with state-of-the-art AI techniques like Deep Learning could change the way we think about and protect our planet's resources.

This Docker container provides a plug-and-play tool to perform super-resolution on satellite imagery. It uses Deep Learning to provide a better-performing alternative to classical pansharpening (more details in [1]).

Minimum requirements

Working with satellite imagery is memory intensive, so the absolute minimum is 16 GB of RAM. To work with full images rather than small patches, you will probably need on the order of 50 GB. If these memory requirements are not met, obscure TensorFlow shape errors can appear.
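Because a memory shortfall shows up as a confusing shape error rather than a clear out-of-memory message, it can help to check the machine before starting. The following is a minimal sketch, not part of the module: the function names are hypothetical, the thresholds are the 16 GB and 50 GB figures quoted above, and `os.sysconf` is assumed to be available (Linux and most Unix systems).

```python
import os

MIN_RAM_GB = 16         # absolute minimum quoted above
FULL_IMAGE_RAM_GB = 50  # rough figure quoted above for full images


def total_ram_gb():
    """Return the physical RAM in decimal GB (Unix-like systems only)."""
    total_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    return total_bytes / 1e9


def classify_memory(ram_gb):
    """Map an amount of RAM to what the text above says it allows."""
    if ram_gb < MIN_RAM_GB:
        return "insufficient"
    if ram_gb < FULL_IMAGE_RAM_GB:
        return "patches-only"
    return "full-images"


if __name__ == "__main__":
    ram = total_ram_gb()
    print(f"{ram:.1f} GB RAM: {classify_memory(ram)}")
```

Note that this reports the RAM of the host; a container started with a memory limit may see less.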

The PREDICT method expects a compressed file (zip or tar) containing a complete satellite tile. Tiles differ for each satellite type and can be downloaded from each satellite's official repository. We nevertheless provide some samples for each satellite so that users can test the module. The output is a GeoTIFF file with the super-resolved region.
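If a downloaded tile is available as an uncompressed folder, it has to be packed before it can be sent to PREDICT. The sketch below shows one way to do this with the Python standard library. The helper name `pack_tile` is hypothetical, and keeping the top-level tile folder inside the archive is an assumption, since the expected internal layout is not specified here.

```python
import shutil
from pathlib import Path


def pack_tile(tile_dir, archive_format="zip"):
    """Compress a tile folder into an archive next to it; return the archive path."""
    tile_dir = Path(tile_dir).resolve()
    if not tile_dir.is_dir():
        raise NotADirectoryError(f"{tile_dir} is not a directory")
    # Assumption: the top-level tile folder is kept inside the archive.
    archive = shutil.make_archive(
        base_name=str(tile_dir),        # written as <tile_dir>.<extension>
        format=archive_format,          # "zip" or "tar"
        root_dir=str(tile_dir.parent),  # paths in the archive are relative to this
        base_dir=tile_dir.name,         # only the tile folder is archived
    )
    return Path(archive)
```

For example, `pack_tile("my_tile")` would write `my_tile.zip` beside the folder, and `pack_tile("my_tile", "tar")` would write `my_tile.tar`.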

Right now we are supporting super-resolution for the following satellites:

More information on the supported satellites and processing levels can be found here, along with some demo images of super-resolutions performed on non-training data.

If you want to perform super-resolution on another satellite, go to the training section to see how you can easily add support for additional satellites. We are happy to accept PRs!

References

[1]: Lanaras, C., Bioucas-Dias, J., Galliani, S., Baltsavias, E., & Schindler, K. (2018). Super-resolution of Sentinel-2 images: Learning a globally applicable deep neural network. ISPRS Journal of Photogrammetry and Remote Sensing, 146, 305-319.

Test this module

You can test and execute this module in various ways.

Execute locally on your computer using Docker

You can run this module directly on your computer, assuming that you have Docker installed, by following these steps:

$ docker pull deephdc/deep-oc-satsr

$ docker run -ti -p 5000:5000 deephdc/deep-oc-satsr

Execute on your computer using udocker

If you do not have Docker available or you do not want to install it, you can use udocker within a Python virtualenv:

$ virtualenv udocker

$ source udocker/bin/activate

(udocker) $ pip install udocker

(udocker) $ udocker pull deephdc/deep-oc-satsr

(udocker) $ udocker create deephdc/deep-oc-satsr

(udocker) $ udocker run -p 5000:5000 deephdc/deep-oc-satsr

In either case, once the module is running, point your browser to http://127.0.0.1:5000/ and you will see the API documentation, where you can test the module functionality, as well as perform other actions (such as training).
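The container can take a moment to start, so a script that talks to the API may want to wait until the address above responds. This is a minimal sketch using only the Python standard library. The function name `wait_for_api` and its timeout values are hypothetical, and it only checks that the server answers at the root URL; it does not call any specific API endpoint.

```python
import time
import urllib.error
import urllib.request


def wait_for_api(url="http://127.0.0.1:5000/", timeout=60.0, interval=2.0):
    """Poll `url` until the server answers or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5):
                return True
        except urllib.error.HTTPError:
            # The server answered, even if with an error status: it is up.
            return True
        except (urllib.error.URLError, OSError):
            # Connection refused or timed out: the module is still starting.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Calling `wait_for_api()` after `docker run` or `udocker run` returns `True` once the server is reachable and `False` if nothing answers within the timeout.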

For more information, refer to the user documentation.

Train this module

You can train this model using the DEEP framework. To execute this module in our pilot e-Infrastructure, you need to be registered in the DEEP IAM.

Once you are registered, you can go to our training dashboard to configure and train it.

For more information, refer to the user documentation.