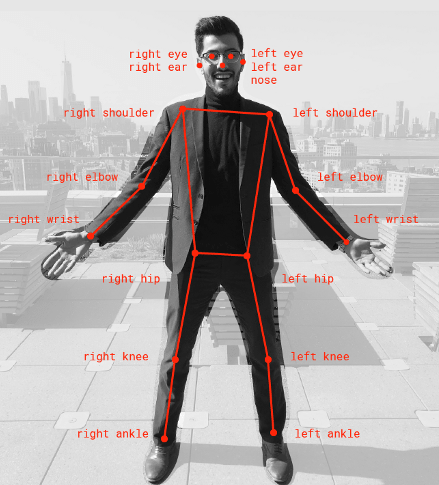

Body pose detection

Detect body poses in images.

Model | Inference | Pre-trained

Published by

DEEP-Hybrid-DataCloud Consortium


Model Description

This is a plug-and-play tool for real-time pose estimation using deep neural networks. The original model, weights, code, etc. were created by Google and can be found at https://github.com/tensorflow/tfjs-models/tree/master/posenet.

PoseNet can estimate either a single pose or multiple poses: one version of the algorithm detects only one person in an image or video, while another version detects multiple persons.

The PREDICT method expects an RGB image (or the URL of an image) as input and returns the detected body keypoints, each with its coordinates and associated score.
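As a rough illustration of consuming that output, the sketch below assumes each pose follows the structure documented for the original tfjs-models PoseNet: an overall `score` plus a list of `keypoints`, each with a `part` name, a `score` and an `{x, y}` `position`. The JSON actually returned by this module's PREDICT method may be shaped differently. The helper names `best_pose` and `confident_keypoints`, the 0.5 threshold and the sample data are hypothetical choices for this example.

```python
from typing import Any

# Assumption: each pose mirrors the tfjs-models PoseNet layout:
# {"score": float, "keypoints": [{"part": str, "score": float,
#                                 "position": {"x": float, "y": float}}, ...]}
# This module's real PREDICT output may use a different structure.
Pose = dict[str, Any]


def best_pose(poses: list[Pose]) -> Pose:
    """Return the pose with the highest overall score."""
    if not poses:
        raise ValueError("no poses detected")
    return max(poses, key=lambda p: p["score"])


def confident_keypoints(pose: Pose, min_score: float = 0.5) -> dict[str, tuple[float, float]]:
    """Map each body part whose keypoint score reaches min_score to its (x, y) position."""
    return {
        kp["part"]: (kp["position"]["x"], kp["position"]["y"])
        for kp in pose["keypoints"]
        if kp["score"] >= min_score
    }


# Hypothetical multi-pose result used only to exercise the helpers.
poses = [
    {"score": 0.9, "keypoints": [
        {"part": "nose", "score": 0.98, "position": {"x": 120.0, "y": 80.5}},
        {"part": "leftWrist", "score": 0.2, "position": {"x": 90.0, "y": 210.0}},
    ]},
    {"score": 0.4, "keypoints": []},
]

print(confident_keypoints(best_pose(poses)))  # {'nose': (120.0, 80.5)}
```

With single-pose estimation the list would hold just one pose, so `best_pose` simply returns it; with multi-pose estimation, dropping `best_pose` and applying `confident_keypoints` to every entry keeps all detected persons.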

Test this module

You can test and execute this module in various ways.

Execute locally on your computer using Docker

You can run this module directly on your computer, assuming that you have Docker installed, by following these steps:

$ docker pull deephdc/deep-oc-posenet-tf

$ docker run -ti -p 5000:5000 deephdc/deep-oc-posenet-tf

Execute on your computer using udocker

If you do not have Docker available or you do not want to install it, you can use udocker within a Python virtualenv:

$ virtualenv udocker

$ source udocker/bin/activate

(udocker) $ pip install udocker

(udocker) $ udocker pull deephdc/deep-oc-posenet-tf

(udocker) $ udocker create deephdc/deep-oc-posenet-tf

(udocker) $ udocker run -p 5000:5000 deephdc/deep-oc-posenet-tf

In either case, once the module is running, point your browser to http://127.0.0.1:5000/ and you will see the API documentation, where you can test the module functionality, as well as perform other actions (such as training).
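When scripting against the module instead of using the browser, it can help to wait until the API is reachable, since the container may take a moment to start. The following is a minimal standard-library sketch; `wait_for_api` and its timeout values are hypothetical, and it only checks that the root URL mentioned above answers, not that any particular endpoint works.

```python
import time
import urllib.error
import urllib.request


def wait_for_api(url: str = "http://127.0.0.1:5000/", timeout: float = 60.0,
                 interval: float = 1.0) -> bool:
    """Poll url until it returns any HTTP response, or give up after timeout seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server answered (even with an error status), so it is up.
            return True
        except (urllib.error.URLError, OSError):
            pass  # Nothing listening yet, e.g. the container is still starting.
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

A script could call `wait_for_api()` right after launching the container and only send its first request once it returns `True`.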

For more information, refer to the user documentation.