Object Detection and Classification with PyTorch

A trained Region-based Convolutional Neural Network (Faster R-CNN) for object detection and classification.

Model | Trainable | Inference | Pre-trained

Published by

DEEP-Hybrid-DataCloud Consortium

Model Description

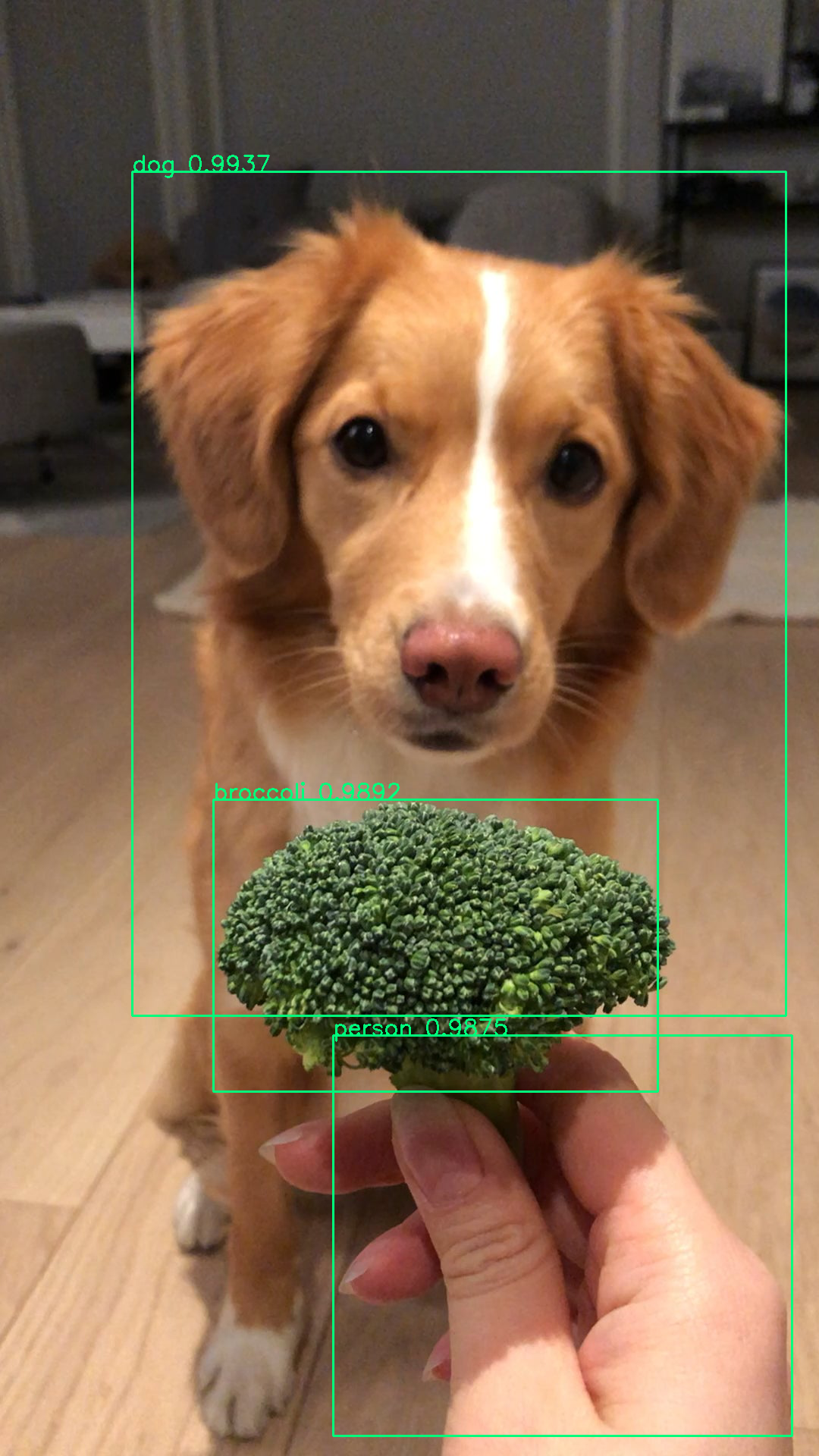

This is a plug-and-play tool for object detection and classification using deep neural networks (Faster R-CNN ResNet-50 FPN architecture [1]) pretrained on the COCO dataset. The code uses the PyTorch library; more information can be found at Pytorch-Object-Detection.

The PREDICT method expects an image as input and returns a JSON with the predictions whose probability is greater than the probability threshold. For example, if an image shows a cat and a dog together and the model outputs a probability of 50% for the dog and 80% for the cat, setting the threshold to 70% means that only the cat is detected, because its probability is greater than 70%.
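The thresholding rule described above can be sketched in a few lines of Python. This is only an illustration: the `label` and `probability` field names and the `filter_predictions` helper are hypothetical and are not taken from the module's actual JSON schema.

```python
def filter_predictions(predictions, threshold):
    """Keep only predictions whose probability is strictly greater than threshold."""
    return [p for p in predictions if p["probability"] > threshold]


# Hypothetical model output for an image with a dog and a cat.
predictions = [
    {"label": "dog", "probability": 0.50},
    {"label": "cat", "probability": 0.80},
]

kept = filter_predictions(predictions, threshold=0.70)
print([p["label"] for p in kept])  # prints ['cat']
```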

This module works on uploaded images and outputs the rectangle coordinates (x1, y1) and (x2, y2) where each classified object is located. It also provides the probability of each detected object.
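As a rough illustration of how the rectangle coordinates might be used, the sketch below derives the width, height and centre of a box. It assumes that (x1, y1) is the top-left corner and (x2, y2) the bottom-right corner in pixel coordinates, which is the convention torchvision uses for Faster R-CNN boxes; the `Box` type and its methods are hypothetical helpers, not part of the module.

```python
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned rectangle; assumes (x1, y1) is top-left and (x2, y2) is bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float

    def size(self) -> Tuple[float, float]:
        """Return (width, height) of the rectangle in pixels."""
        return (self.x2 - self.x1, self.y2 - self.y1)

    def center(self) -> Tuple[float, float]:
        """Return the (x, y) coordinates of the rectangle's centre."""
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)


# Hypothetical detection: a box from (10, 20) to (110, 220).
box = Box(10, 20, 110, 220)
print(box.size())    # prints (100, 200)
print(box.center())  # prints (60.0, 120.0)
```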

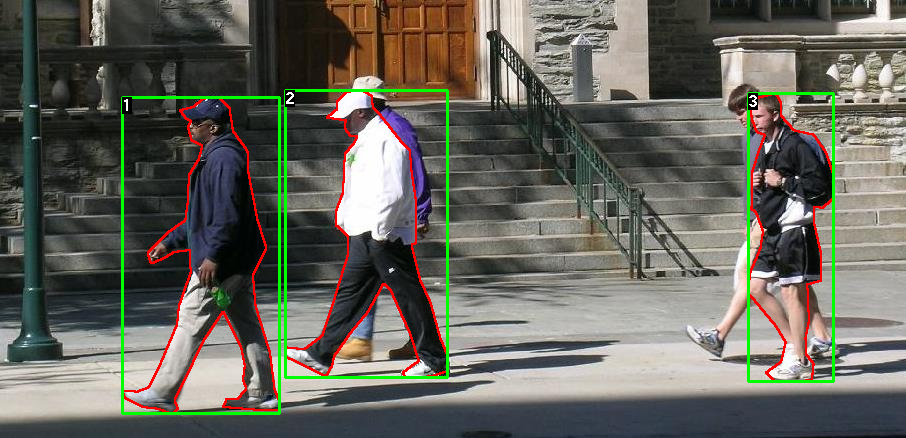

The TRAINING method uses transfer learning and expects parameters such as the learning rate, the names of the classes, etc. Transfer learning focuses on storing knowledge gained while solving one problem and applying it to a different but related problem [2]. To achieve this, the output layer of the pre-trained model is removed and a new one with the new number of outputs is added. Only that new layer is trained. An example of transfer learning is provided and implemented in this module.

The model requires a new dataset with the classes that are going to be classified and detected. In this case the Penn-Fudan Database for Pedestrian Detection and Segmentation was used to detect pedestrians.

To try this in the module, place the two dataset folders (Images and Masks) in the obj_detect_pytorch/dataset/ folder. More information about the code, the Penn-Fudan dataset and the structuring of a custom dataset can be found at Torchvision Object Detection Finetuning.
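Before starting a training run, it can help to confirm that the expected folders are in place. The snippet below is a small sketch using only the standard library; the `check_dataset_layout` helper is hypothetical, and it only checks that the Images and Masks folders exist and counts the files in each, without validating file names or contents.

```python
from pathlib import Path


def check_dataset_layout(dataset_dir="obj_detect_pytorch/dataset"):
    """Return the number of files in the Images and Masks folders.

    Raises FileNotFoundError if either folder is missing.
    """
    root = Path(dataset_dir)
    counts = {}
    for name in ("Images", "Masks"):
        folder = root / name
        if not folder.is_dir():
            raise FileNotFoundError(f"Missing dataset folder: {folder}")
        # Count regular files only; sub-directories are ignored.
        counts[name] = sum(1 for entry in folder.iterdir() if entry.is_file())
    return counts
```

A mismatch between the two counts returned by `check_dataset_layout()` suggests that some images lack a mask, or vice versa.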

References

[1]: Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv preprint arXiv:1506.01497 (2016).

[2]: Jeremy West, Dan Ventura, Sean Warnick. Spring Research Presentation: A Theoretical Foundation for Inductive Transfer. Brigham Young University, College of Physical and Mathematical Sciences (2007).

Test this module

You can test and execute this module in various ways.

Execute locally on your computer using Docker

You can run this module directly on your computer, assuming that you have Docker installed, by following these steps:

$ docker pull deephdc/deep-oc-obj_detect_pytorch

$ docker run -ti -p 5000:5000 deephdc/deep-oc-obj_detect_pytorch

Execute on your computer using udocker

If you do not have Docker available or you do not want to install it, you can use udocker within a Python virtualenv:

$ virtualenv udocker

$ source udocker/bin/activate

(udocker) $ pip install udocker

(udocker) $ udocker pull deephdc/deep-oc-obj_detect_pytorch

(udocker) $ udocker create deephdc/deep-oc-obj_detect_pytorch

(udocker) $ udocker run -p 5000:5000 deephdc/deep-oc-obj_detect_pytorch

In either case, once the module is running, point your browser to http://127.0.0.1:5000/ and you will see the API documentation, where you can test the module functionality, as well as perform other actions (such as training).

For more information, refer to the user documentation.

Train this module

You can train this model using the DEEP framework. To execute this module in our pilot e-Infrastructure, you need to be registered in the DEEP IAM.

Once you are registered, you can go to our training dashboard to configure and train it.

For more information, refer to the user documentation.