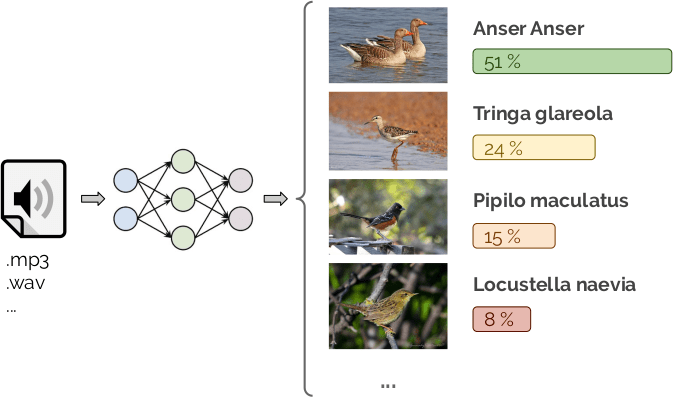

Bird sound classifier

Classify audio files among bird species from the Xenocanto dataset.

Model | Trainable | Inference | Pre-trained

Published by

DEEP-Hybrid-DataCloud Consortium


Model Description

CAUTION: This module is in a development stage. Predictions might still not be reliable enough.

This is a plug-and-play tool to perform bird sound classification with Deep Learning. The PREDICT method expects an audio file as input (or the URL of an audio file) and returns a JSON with the top 5 predictions. Most audio file formats are supported (see FFMPEG compatible formats).
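As a rough illustration of calling PREDICT from the command line once the module is running locally, the sketch below uploads an audio file with curl. The endpoint path `/v2/models/audioclas/predict/` and the form field name `data` are assumptions that do not come from this page, and `predict_url` and `predict_file` are hypothetical helper names. Check the API documentation served by the running module for the actual values before relying on them.

```shell
#!/bin/sh
# Sketch only. PREDICT_PATH and FILE_FIELD are ASSUMPTIONS, not taken from
# this page: look up the real values in the module's API documentation.
API_BASE="http://127.0.0.1:5000"
PREDICT_PATH="/v2/models/audioclas/predict/"   # assumed endpoint path
FILE_FIELD="data"                              # assumed multipart field name

# Print the full URL the request is sent to.
predict_url() {
    printf '%s%s\n' "$API_BASE" "$PREDICT_PATH"
}

# Upload a local audio file ($1) and print the JSON response to stdout.
# -f makes curl exit non-zero on HTTP errors (status 400 and above).
predict_file() {
    curl -s -f -X POST "$(predict_url)" \
        -H 'accept: application/json' \
        -F "${FILE_FIELD}=@$1"
}
```

With the module running, `predict_file recording.wav` would print the JSON response, which according to the description above contains the top 5 predictions. `recording.wav` is a placeholder file name.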

We use the Xenocanto dataset [1] which has around 350K samples covering 10K species. As an initial assessment we have trained the model on the 73 most popular species, which account for roughly 20% of all observations.

This service is based on the Audio Classification with Tensorflow model.

References

[1]: Vellinga W (2019). Xeno-canto - Bird sounds from around the world. Xeno-canto Foundation for Nature Sounds. Occurrence dataset https://doi.org/10.15468/qv0ksn accessed via GBIF.org on 2019-10-25.

Test this module

You can test and execute this module in various ways.

Execute locally on your computer using Docker

You can run this module directly on your computer, assuming that you have Docker installed, by following these steps:

$ docker pull deephdc/deep-oc-birds-audio-classification-tf

$ docker run -ti -p 5000:5000 deephdc/deep-oc-birds-audio-classification-tf

Execute on your computer using udocker

If you do not have Docker available or you do not want to install it, you can use udocker within a Python virtualenv:

$ virtualenv udocker

$ source udocker/bin/activate

(udocker) $ pip install udocker

(udocker) $ udocker pull deephdc/deep-oc-birds-audio-classification-tf

(udocker) $ udocker create deephdc/deep-oc-birds-audio-classification-tf

(udocker) $ udocker run -p 5000:5000 deephdc/deep-oc-birds-audio-classification-tf

In either case, once the module is running, point your browser to http://127.0.0.1:5000/ and you will see the API documentation, where you can test the module functionality, as well as perform other actions (such as training).
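The container can take a moment to start serving requests. A minimal sketch for checking from a terminal that the API is reachable before opening the browser is shown here. The helper name `wait_for_api` and the retry count are illustrative choices, not part of the module.

```shell
#!/bin/sh
# URL taken from the instructions above.
API_URL="http://127.0.0.1:5000/"

# Poll a URL once per second. Return 0 as soon as it answers without an
# HTTP error, or 1 after the given number of attempts (default: 30).
wait_for_api() {
    url="$1"
    retries="${2:-30}"
    i=0
    while [ "$i" -lt "$retries" ]; do
        # -s: silent, -f: fail on HTTP status >= 400, -o /dev/null: discard body
        if curl -s -f -o /dev/null --max-time 2 "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}
```

For example, `wait_for_api "$API_URL" 60 && echo "API is up"` waits up to about a minute (longer if individual requests time out) and prints a message once the service responds.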

For more information, refer to the user documentation.

Train this module

You can train this model using the DEEP framework. In order to execute this module in our pilot e-Infrastructure you would need to be registered in the DEEP IAM.

Once you are registered, you can go to our training dashboard to configure and train it.

For more information, refer to the user documentation.